Audio Tutorials


Digital Audio vs. Analog Recording

Some musicians shy away from digital technology in favor of the traditional analog methods of recording on tape and vinyl. The argument is that digital sampling loses information, which results in lower-fidelity music. However, in numerous blind listening tests, musicians and regular listeners were not able to tell the difference between an analog recording and a digital recording.

Most recording and mastering artists believe that the recording medium really depends on the type of music. In some cases analog recording sounds better just because everything in the live recording went right and there isn't a need for much additional post-editing. Digital editing can produce more controlled effects when it comes to modifying vocals or getting a specific sound.

The debate extends to whether live performances are superior to studio versions. A lot of artists think the performance has more soul than a recording, while others think the studio version should be considered the true version, like the final cut of a film. The question of whether digital editing covers up or detracts from the original vocals and music will likely remain a debated subject amongst musicians for some time.

AU Review: Dave Grohl talks Analog vs Digital

Music and Technology

Music is always changing. Recent music genres like EDM, Remix, Dubstep, Techno, Pop, and Experimental have supporters and detractors, but digital technology as a whole has had a major impact on the way people experience music.

The technology and tools available to artists are constantly expanding. The internet has brought greater attention to indie, underground, and alternative music. Venues and websites have opened up to support unknown artists. Resources were never as readily available in the past as they are today. Digital streaming has completely changed the way people listen to music. Music can be downloaded or shared. Online sharing enables bands to reach out to an unprecedented audience of millions. The role of visual performance is arguably as much a part of the music industry today as the audio thanks to music videos and concert recordings.

While there will always be a place for traditional and classic music genres, the influence of digital technology should not be written off as a passing trend in the music field. Digital editing is a creative tool for new kinds of expression that should be judged like any other brand of music on the merits of the artists, not the medium.

The Atlantic: Buy the Hype: Why Electronic Dance Music Really Could Be the New Rock

Kaptain Kristian: Gorillaz - Deconstructing Genre

TED Talks: Mark Applebaum- The mad scientist of music

Waves

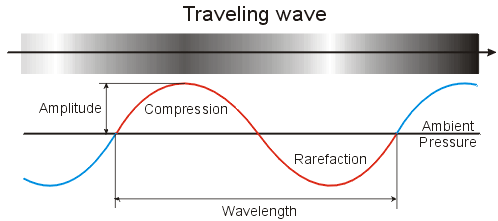

Sound is a form of vibrational energy that is transferred through a medium like air in the form of waves. Humans can only hear sounds in the range of about 20-20,000 Hertz. Sound can travel through solids, liquids, and gases. An oscilloscope connected to a microphone can be used to display soundwaves. Amplitude denotes the peak height of a waveform.

Air pressure can play a role in how sound travels. The less air pressure, the harder it is for sound to travel, so the loudness will decrease. Sound cannot be heard in a vacuum because atoms are too few and spread too far apart.

Shock waves are produced when an object travels faster than the speed of sound, which is about 1,225 km/h (761 mph) in air at sea level. The speeding object compresses the air ahead of it faster than the pressure waves can move away, creating a sonic boom.

Veritasium: Pyro Board: 2D Rubens' Tube!

CYMATICS: Science Vs. Music - Nigel Stanford

Pitch vs. Tempo

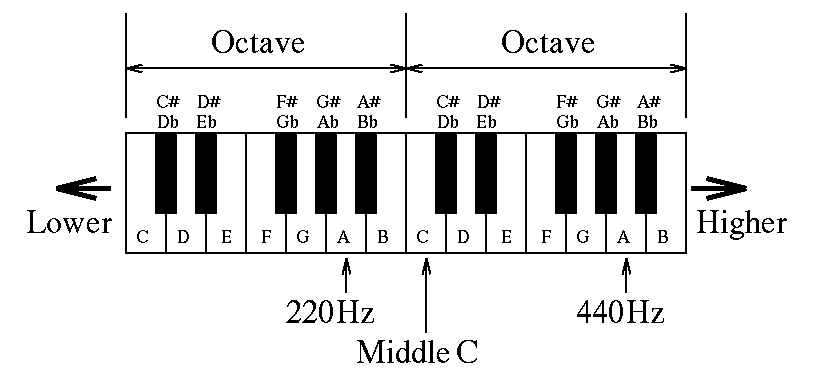

Pitch refers to the highness or lowness of sound. Frequency is how many times a wave completes a full cycle in a set amount of time. 1 Hertz = 1 vibration/second. The higher the frequency, the higher the pitch.
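The relationship between frequency, cycle length, and physical wavelength can be sketched in a few lines. This is only an illustration: the function names are made up for this example, and the speed of sound is taken from the 1,225 km/h sea-level figure quoted in the Waves section.

```python
# Speed of sound from the 1,225 km/h figure in the text, converted to m/s.
SPEED_OF_SOUND_M_S = 1225 / 3.6

def period_seconds(frequency_hz):
    """Time taken by one full cycle of the wave."""
    return 1.0 / frequency_hz

def wavelength_meters(frequency_hz, speed=SPEED_OF_SOUND_M_S):
    """Physical length of one cycle as it travels through air."""
    return speed / frequency_hz

# The edges of human hearing plus a mid-range tone (440 Hz is an example value).
for frequency in (20, 440, 20_000):
    print(frequency, "Hz:", period_seconds(frequency), "s per cycle,",
          round(wavelength_meters(frequency), 4), "m long")
```

Low-pitched sounds have long, slow cycles, while high-pitched sounds have short, fast ones.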

Bit Rate and Sampling Rate

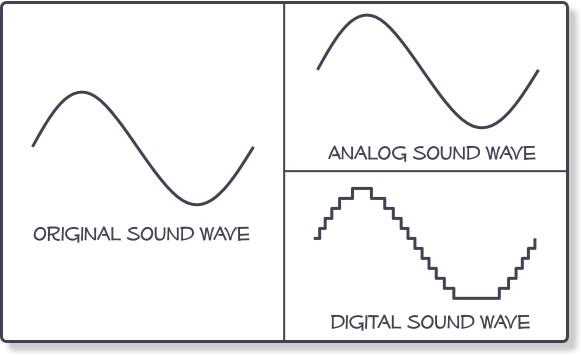

Digital audio cannot be stored on a computer as an uninterrupted waveform. Instead, the track is sampled and the information gets converted into bits. This results in a staggered, stair-step approximation of the wave. CD-quality digital audio is sampled at a rate of 44.1 kHz, or 44,100 times per second, so the steps are very small and barely noticeable to the human ear.
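As a rough sketch of what sampling means, the snippet below measures a sine wave 44,100 times per second and stores each measurement as a number. The 441 Hz test tone and the function name are assumptions chosen so that one cycle lands on exactly 100 samples.

```python
import math

SAMPLE_RATE_HZ = 44_100  # CD-quality sampling rate from the text

def sample_sine(frequency_hz, duration_s, sample_rate_hz=SAMPLE_RATE_HZ):
    """Return the wave's value at each sampling instant."""
    count = round(duration_s * sample_rate_hz)
    return [
        math.sin(2 * math.pi * frequency_hz * n / sample_rate_hz)
        for n in range(count)
    ]

# A hundredth of a second of a 441 Hz tone is 441 separate measurements.
samples = sample_sine(441, 0.01)
print(len(samples), "samples,", SAMPLE_RATE_HZ // 441, "samples per cycle")
```

The list of numbers is all the computer keeps. Everything between two neighboring samples is left for the playback hardware to fill back in.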

Bit Depth

Bit depth is the number of bits used to store each sample. The more bits per sample, the more precisely each sample's level can be recorded and the higher quality the audio will be. Audio uses a standard called Pulse-Code Modulation (PCM). Audio file bit depth can range from 4-64 bits per sample. 4 bits is just awful, so make sure never to use it. 8-bit sound is basically the quality of old arcade and Nintendo Game Boy music. 16-24 bits is the modern standard. Higher bit depth will result in much larger file sizes, and requires more advanced recording equipment.
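To put numbers on bit depth, this small sketch counts how many distinct levels each sample can take and how many bits per second an uncompressed PCM stream needs. The function names are invented for the example, and the stereo 44.1 kHz settings are just sample values.

```python
def quantization_levels(bit_depth):
    """Number of distinct values a single sample can take."""
    return 2 ** bit_depth

def pcm_bit_rate(sample_rate_hz, bit_depth, channels):
    """Bits per second needed for uncompressed PCM audio."""
    return sample_rate_hz * bit_depth * channels

for bits in (4, 8, 16, 24):
    print(bits, "bits:", quantization_levels(bits), "levels")

# Example: 16-bit stereo audio sampled at 44.1 kHz.
print(pcm_bit_rate(44_100, 16, 2), "bits per second")
```

Each extra bit doubles the number of levels, which is why 4-bit audio sounds so rough and why higher bit depths make files grow quickly.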

Over time, resampling a video or audio file will cause degradation as more information is lost each subsequent sampling. In extreme cases, this can result in corruptions and glitches.

Video Data Degradation caused by resampling 1,000 times

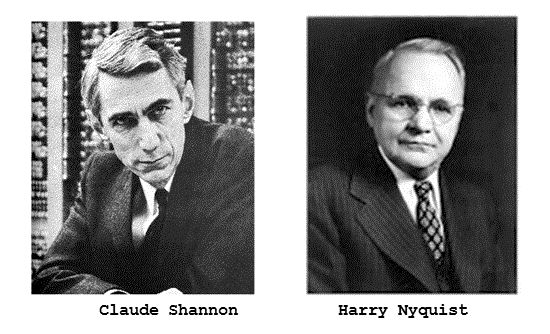

Nyquist-Shannon Sampling Theorem

This theorem states that, in order to capture an analog signal accurately, the sampling rate must be at least twice the highest frequency in the original signal. The upper limit of human hearing is about 20 kHz, so CD audio uses a standard sampling rate of 44.1 kHz, which puts the Nyquist frequency (half the sampling rate) at 22.05 kHz.
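The theorem can be illustrated with a short sketch that works out the Nyquist frequency and shows where a too-high tone ends up after sampling. The helper names are hypothetical, and the folding rule assumes an ideal sampler with no filtering in front of it.

```python
def nyquist_frequency(sample_rate_hz):
    """Highest frequency that a given sampling rate can capture."""
    return sample_rate_hz / 2

def alias_frequency(tone_hz, sample_rate_hz):
    """Frequency that a sampled tone appears to have after sampling.

    Tones below the Nyquist frequency come back unchanged. Higher tones
    fold back into the range between 0 and the Nyquist frequency.
    """
    folded = tone_hz % sample_rate_hz
    return min(folded, sample_rate_hz - folded)

print(nyquist_frequency(44_100))        # half the sampling rate
print(alias_frequency(1_000, 44_100))   # below the limit: unchanged
print(alias_frequency(30_000, 44_100))  # above the limit: folds down
```

A 30 kHz tone is inaudible on its own, but once sampled at 44.1 kHz it would show up as a false tone well inside the audible range.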

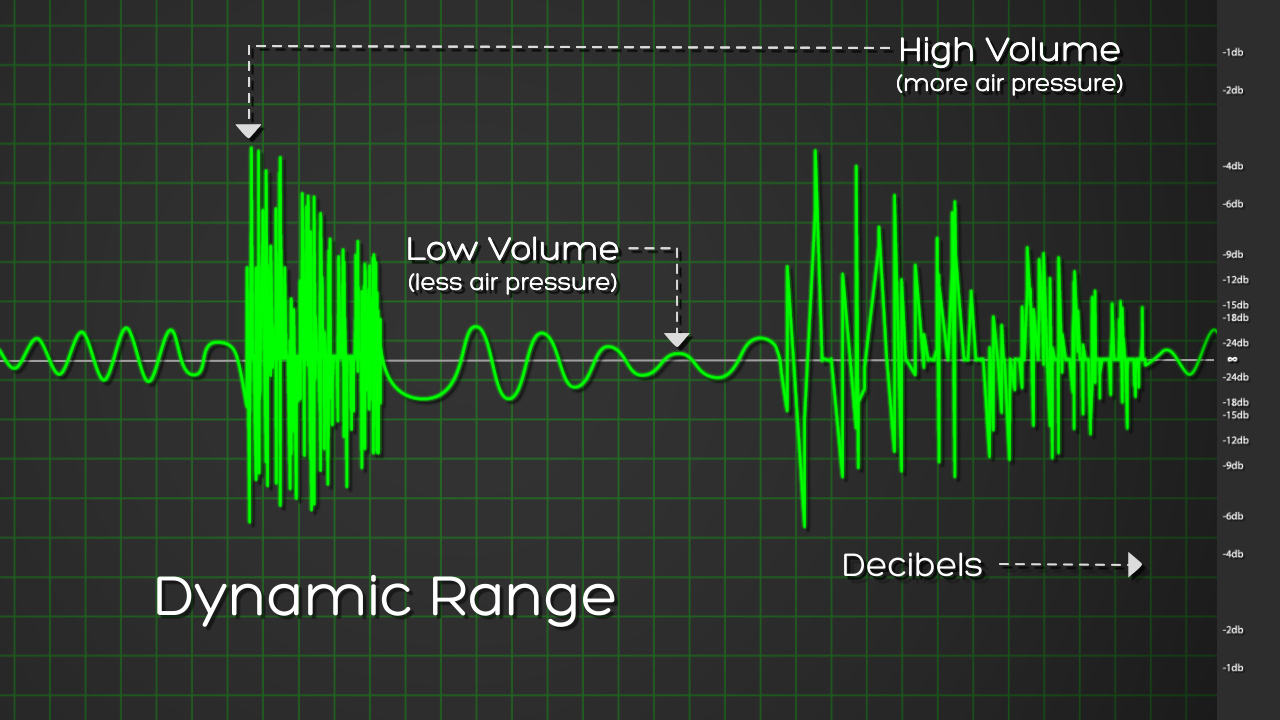

Dynamic Range

Dynamic Range refers to the difference between the loudest and quietest levels a system can handle, measured in decibels. Humans have a hearing range of about 140 dB. Technology isn't organic, so it has less flexibility. In theory, 16-bit audio has a dynamic range of about 96 dB and 24-bit audio about 144 dB, but real-world equipment tops out at around 120 dB. The minimum value is called the noise floor. It is not really possible for most audio equipment to exceed 129 dB of dynamic range. Distortion occurs when a signal goes above the maximum level the equipment can handle.
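The link between bit depth and dynamic range comes from a standard rule of thumb: each bit adds roughly 6 dB. The sketch below computes the theoretical figure only. It ignores the limits of real converters, and the function name is an assumption for the example.

```python
import math

def theoretical_dynamic_range_db(bit_depth):
    """Ratio between the largest and smallest PCM step, in decibels."""
    return 20 * math.log10(2 ** bit_depth)

for bits in (8, 16, 24):
    print(bits, "bits:", round(theoretical_dynamic_range_db(bits), 2), "dB")
```

These are upper bounds. Noise in microphones, cables, and converters keeps real equipment below the 24-bit theoretical figure.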

Artifacts, Noise, Dithering, and Aliasing

Sound doesn't always record perfectly. Sometimes there are background noises or ragged audio. Sound clips may have been poorly edited, resulting in cut-off sounds and artifacts. One common problem is recording frequencies that are higher than half the sampling rate, which are usually beyond the range of human hearing. The sampler cannot represent these frequencies correctly, so they show up as false lower frequencies, an effect called aliasing. Luckily, they can be removed fairly easily by applying an anti-aliasing (low-pass) filter before sampling.

Dithering is a mathematical process applied when audio is converted to a lower bit depth. Dithering adds a small amount of random noise that helps the values average out better, producing less distortion at the lower bit depth.
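A tiny experiment shows why adding noise can help. In this sketch a very quiet signal level (0.3 of one quantization step, an arbitrary example value) is rounded to whole steps many times, with and without dither. The triangular noise shape used here is one common choice and is an assumption, not something the text specifies.

```python
import random

def quantize(value, step):
    """Round a value to the nearest quantization step."""
    return step * round(value / step)

def quantize_with_dither(value, step, rng):
    """Add triangular noise (two uniform values subtracted) before rounding."""
    noise = (rng.random() - rng.random()) * step
    return step * round((value + noise) / step)

rng = random.Random(0)   # fixed seed so the run is repeatable
step = 1.0               # size of one quantization step
quiet_level = 0.3        # signal smaller than half a step

plain = [quantize(quiet_level, step) for _ in range(10_000)]
dithered = [quantize_with_dither(quiet_level, step, rng) for _ in range(10_000)]

plain_mean = sum(plain) / len(plain)
dithered_mean = sum(dithered) / len(dithered)
print("without dither:", plain_mean)
print("with dither:", round(dithered_mean, 3))
```

Without dither the quiet signal is rounded away to silence every time. With dither the individual samples are noisy, but their average lands close to the true level, so the information survives as gentle noise instead of distortion.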

Jonathan Clark: Nyquist, Fletcher-Munson Curves, Anti-Aliasing, Quantization Noise, and Dithering

Resonance, Reverberation

Resonance occurs when sound vibration causes another object to oscillate. It is strongest when the sound matches the object's natural frequency, and it generally takes very concentrated energy to cause visible effects. Sound level is measured in decibels, a unit that denotes relative power or intensity. Prolonged exposure to noise over 80 decibels can cause damage to hearing. Most rock concerts and loud headphone music enter the 100-120 decibel range. 120 dB is the pain threshold for noise. Gunshots, fireworks, and explosions are around 140 decibels. 150 decibels is about the limit for when human eardrums will start to rupture.
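Decibels are logarithmic, so the gaps between the figures above are larger than they look. This sketch converts a decibel difference into an intensity ratio using the standard definition of the decibel. The function name is made up for the example.

```python
def intensity_ratio(louder_db, quieter_db):
    """How many times more intense the louder sound is."""
    return 10 ** ((louder_db - quieter_db) / 10)

# Values taken from the thresholds mentioned in the text.
print(intensity_ratio(120, 100))  # pain threshold vs. a loud concert
print(intensity_ratio(140, 80))   # gunshot vs. the hearing-damage level
```

Every 10 dB step is a tenfold jump in intensity, which is why a few extra decibels at a concert matter more than the numbers suggest.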

Jamie Vendera: Can You Shatter Glass With Your Voice?

Echo

Sound waves can reflect off solid objects that they cannot penetrate. Sonar and echolocation rely on this principle. In general, most sound designers and musicians do not want uncontrolled echo effects. Baffles can be used to soundproof recording studios, but echoes can also be reduced or removed in post.
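The principle behind sonar and echolocation is simple arithmetic: the sound travels to the object and back, so the distance is half the round trip. The sketch below assumes sound in air at the 1,225 km/h figure from the Waves section (sonar in water would use a much higher speed), and the function names are hypothetical.

```python
# Speed of sound in air from the text (1,225 km/h), converted to m/s.
SPEED_OF_SOUND_M_S = 1225 / 3.6

def echo_delay_seconds(distance_m, speed=SPEED_OF_SOUND_M_S):
    """Time for a sound to reach a surface and bounce back."""
    return 2 * distance_m / speed

def distance_from_echo(delay_s, speed=SPEED_OF_SOUND_M_S):
    """Distance to the reflecting surface given the echo delay."""
    return speed * delay_s / 2

print(round(echo_delay_seconds(170), 3), "seconds for a wall 170 m away")
print(round(distance_from_echo(0.1), 1), "meters for a 0.1 second echo")
```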

Nancied: Remove echo from video using Audition

Audio File Types

.WAV, .AIFF, and .RAW files are uncompressed files, meaning they contain all the recorded data. These types of raw files take up a lot of storage space and are not very good for online use. .WAV and .AIFF can be converted to smaller lossy file formats. .WAV is the standard audio format on some PCs.
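To see why uncompressed files are so large, the sketch below builds a one-second .WAV file in memory with Python's standard `wave` module and compares its size to the raw sample data. The 440 Hz tone, the half-volume level, and the function names are arbitrary choices for the example.

```python
import io
import math
import struct
import wave

SAMPLE_RATE_HZ = 44_100
BYTES_PER_SAMPLE = 2  # 16-bit audio
CHANNELS = 1          # mono

def uncompressed_size_bytes(seconds, channels=CHANNELS):
    """Size of the raw PCM sample data, not counting the file header."""
    return round(seconds * SAMPLE_RATE_HZ) * BYTES_PER_SAMPLE * channels

def tone_wav_bytes(frequency_hz=440.0, seconds=1.0):
    """Write a sine tone into an in-memory WAV file and return its bytes."""
    frames = bytearray()
    for n in range(round(seconds * SAMPLE_RATE_HZ)):
        value = math.sin(2 * math.pi * frequency_hz * n / SAMPLE_RATE_HZ)
        # Scale to half of the 16-bit range and pack as little-endian.
        frames += struct.pack("<h", round(value * 0.5 * 32767))
    buffer = io.BytesIO()
    with wave.open(buffer, "wb") as wav_file:
        wav_file.setnchannels(CHANNELS)
        wav_file.setsampwidth(BYTES_PER_SAMPLE)
        wav_file.setframerate(SAMPLE_RATE_HZ)
        wav_file.writeframes(bytes(frames))
    return buffer.getvalue()

wav_data = tone_wav_bytes()
print(len(wav_data), "bytes in the file")
print(uncompressed_size_bytes(1.0), "bytes of raw samples")
print(uncompressed_size_bytes(60, channels=2), "bytes for a minute of stereo")
```

Almost the entire file is sample data with only a small header on top, so file size grows in direct proportion to length, bit depth, and channel count.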

.MP3 files are the most common standard compressed file format for audio. They are lossy, which means some information is lost when converting to this file type, but it is generally not enough to impact sound quality. Lossy files should ideally not be re-edited a large number of times or more significant quality degradation may occur.

.AAC is a newer format designed as the successor to .MP3. AAC audio is often stored inside .MP4 or .M4A files.

.OGG is a lesser-known file type that is sometimes used with HTML5.

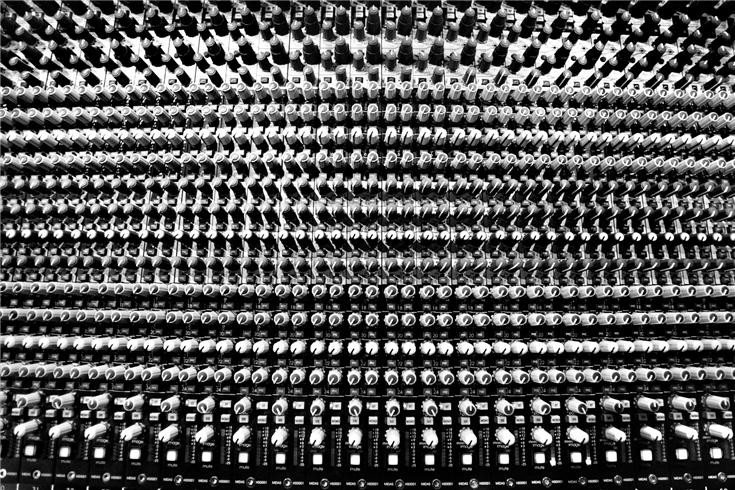

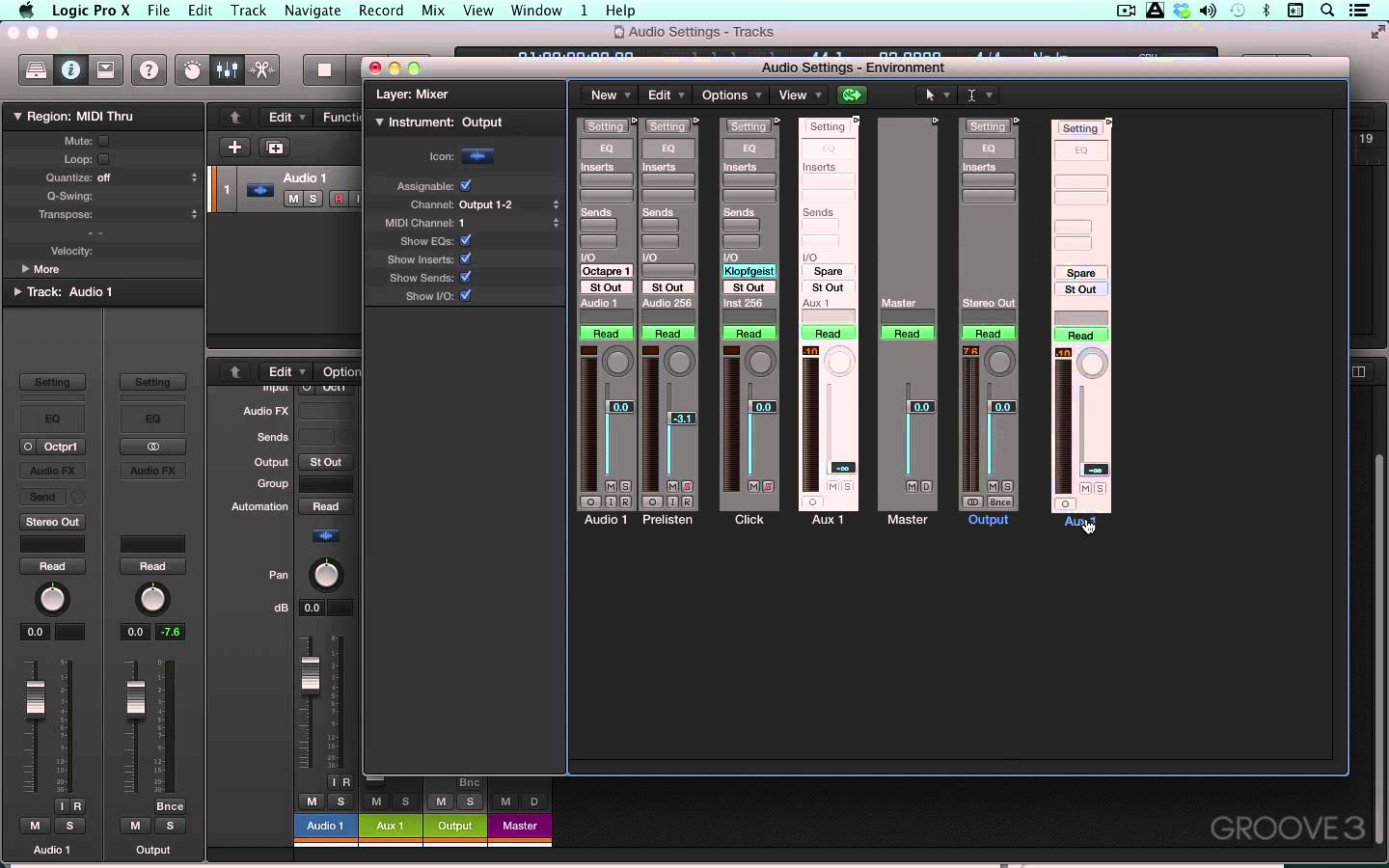

Soundboards

Soundboards have a ridiculous number of buttons and switches. Most of these dials are simple gain and volume adjustment knobs. Each row performs the same effect across the board, so the reality is you only need to memorize the controls for one column. Faders are the slider things located at the bottom of the board that can increase the gain (volume) or fade a channel out.

The hardest part of operating a soundboard is assigning channels. A channel is simply the controller for one particular instrument or sound effect. Every column of buttons represents a separate channel that should correlate with whatever cord is plugged in at the top. Each channel should have an input and output cable socket.

Some soundboards control prerecorded sound effects and music tracks programmed into specific channels. These are activated when the master channel switch is flipped on, and can be turned off to switch to a different track. Timed effects are also possible.

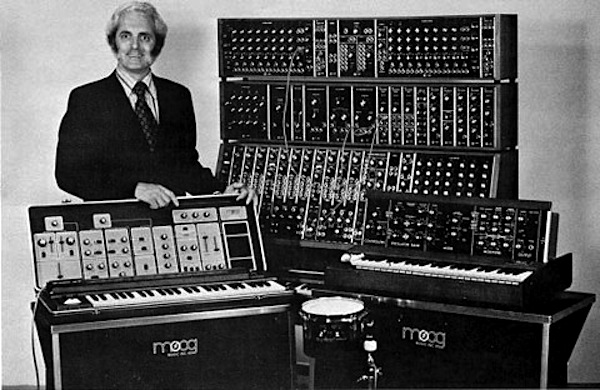

Synthesizers

Electronic synthesizers are used to generate synthetic sounds. The sounds are shaped using modulation, oscillation, amplification, and various filters that stretch and alter the waveforms. Early synthesizers used vacuum tubes to generate and shape the electrical signals. Most synthesizers are played using a keyboard, but it is possible to use other instruments or human voices as well. Modern synthesizers are extremely versatile in the number of preset tracks they can store and the range of sounds they can produce.
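A bare-bones software version of oscillation plus modulation might look like the sketch below: one oscillator produces the tone, and a second, much slower oscillator wobbles its volume (a tremolo effect). This is a toy illustration with made-up names and settings, not how any particular synthesizer is built.

```python
import math

def oscillator(frequency_hz, n, sample_rate_hz):
    """Value of a sine oscillator at sample number n."""
    return math.sin(2 * math.pi * frequency_hz * n / sample_rate_hz)

def tremolo_tone(carrier_hz, wobble_hz, seconds, sample_rate_hz=44_100, depth=0.5):
    """Generate a tone whose volume is modulated by a slow oscillator."""
    samples = []
    for n in range(round(seconds * sample_rate_hz)):
        wobble = oscillator(wobble_hz, n, sample_rate_hz)  # between -1 and 1
        gain = 1.0 - depth * (0.5 + 0.5 * wobble)          # between 1 - depth and 1
        samples.append(gain * oscillator(carrier_hz, n, sample_rate_hz))
    return samples

tone = tremolo_tone(carrier_hz=440, wobble_hz=5, seconds=0.1)
print(len(tone), "samples, peak level", round(max(abs(s) for s in tone), 3))
```

Swapping the sine for a different wave shape, or modulating pitch instead of volume, changes the character of the sound while keeping the same structure.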

Herbie Hancock: Rockit

Kraft Music: Korg KingKORG Synth Demo at NAMM 2013

Theremins

Theremins are really weird. They are one of the only instruments in the world that can be played without any physical contact from the musician. Radio frequency oscillations generate sound based on the distance of the musician's hands from the antennas. The tall antenna is used to control pitch, while the loop antenna controls volume.

Clara Rockmore plays "The Swan" (Saint-Saëns)

Over the Rainbow

MIDI Controller

MIDI stands for Musical Instrument Digital Interface. A MIDI controller plugs into a computer to allow more direct audio control than a mouse. A MIDI connection typically carries 16 channels that can be assigned to other instruments and equipment. Built-in keyboards are fairly common in MIDI controllers, but there are other variations as well.
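The 16 channels come from how MIDI messages are laid out: the channel number is packed into four bits of the first byte. As a minimal sketch, the hypothetical helper below builds a standard three-byte Note On message. Channels are numbered 0-15 inside the message, even though equipment usually labels them 1-16.

```python
def note_on(channel, note, velocity):
    """Build a three-byte MIDI Note On message.

    channel:  0-15 (shown as 1-16 on most equipment)
    note:     0-127, where 60 is middle C
    velocity: 0-127, how hard the key was struck
    """
    if not 0 <= channel <= 15:
        raise ValueError("channel must be 0-15")
    if not 0 <= note <= 127 or not 0 <= velocity <= 127:
        raise ValueError("note and velocity must be 0-127")
    # 0x90 marks a Note On message; the low four bits carry the channel.
    return bytes([0x90 | channel, note, velocity])

message = note_on(channel=0, note=60, velocity=100)
print(message.hex())
```

Note that the message carries no sound at all, only instructions. The receiving instrument or software decides what the note actually sounds like.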

Stereophonic Sound

Stereo sound is directed from two sources, such as loudspeakers or headphones. Stereo sounds more natural to humans than mono sound tracks because we have two ears placed on both sides of the head. Monophonic sound tends to sound flatter and muffled. Stereophonic sound is stored digitally as two channels played simultaneously. One track is usually recorded with a second microphone a slight distance away from the other, which will create a slight difference in audio levels.

Quadraphonic or surround sound creates the richest quality of sound coming from four directions, but it takes more effort to record four different tracks, so stereo is the market standard.

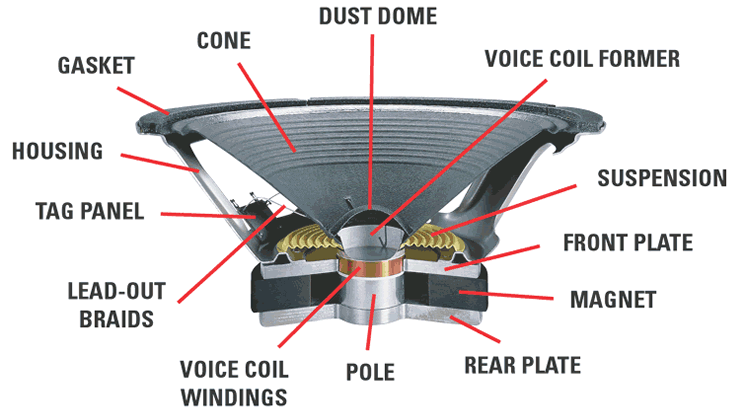

Loudspeakers

Loudspeakers have a simple design, which makes them fairly easy to build and maintain. Speakers work by converting electrical signals into sound vibrations. The signal runs through a coil attached to the lightweight speaker cone. The coil acts as an electromagnet that is pushed and pulled by a fixed magnet, which moves the cone and sends out energy in the form of sound waves. The amount of sound energy can be increased with a resonating case built out of wood or plastic.

A larger loudspeaker size does not automatically make the speaker volume louder. Sensitivity is the most important factor in how well a speaker will work. The higher the sensitivity, the more efficiently the speaker will convert watts of electricity into decibels of sound at a given distance.
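Sensitivity is usually quoted as the sound level measured 1 meter away with 1 watt of power. A common rule of thumb, used here as an assumption rather than anything stated in the text, is that the level rises by 10 times the base-10 logarithm of the power. The speaker ratings in this sketch are invented example values.

```python
import math

def sound_level_db(sensitivity_db, power_watts):
    """Estimated level at 1 meter for a given input power.

    sensitivity_db is the rated level at 1 watt and 1 meter.
    """
    return sensitivity_db + 10 * math.log10(power_watts)

# Two hypothetical speakers driven with the same 10 watt amplifier.
print(sound_level_db(85, 10))  # lower-sensitivity speaker
print(sound_level_db(95, 10))  # higher-sensitivity speaker
```

Under this rule, doubling the amplifier power only adds about 3 dB, so a more sensitive speaker can be a cheaper route to volume than a bigger amplifier.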

ExplainThatStuff: Loudspeakers

Subwoofer

Subwoofers are loudspeakers built to handle the lowest-pitch bass frequencies. They are a requirement for any electronic music shows that involve the phrase "drop the bass." Other speakers are not built to handle the large fluctuations in air pressure and vibrations produced at the bass level, which can cause the equipment to blow out. Subwoofers are generally much larger than other speakers and have a reinforced lining to compensate for the powerful soundwaves. It is recommended to not stand directly next to these speakers at concerts if you value your eardrums.

Polyphonic Sound

Polyphonic sound is when multiple sounds play simultaneously. A band, chorus, orchestra, or a cappella group could be loosely considered polyphonic. In terms of electronic music, audio editing software makes it possible to combine sounds from multiple sources and play multiple tracks at once.
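Under the hood, playing multiple tracks at once mostly comes down to adding their samples together. The sketch below is a simplified assumption of how a mixer might do it, with samples stored as numbers between -1 and 1 and the result scaled down if the sum gets too loud.

```python
def mix(*tracks):
    """Add tracks sample by sample, scaling down if the sum exceeds 1.0."""
    length = max(len(track) for track in tracks)
    mixed = [
        sum(track[i] for track in tracks if i < len(track))
        for i in range(length)
    ]
    peak = max((abs(sample) for sample in mixed), default=0.0)
    if peak > 1.0:
        mixed = [sample / peak for sample in mixed]
    return mixed

print(mix([0.5, 0.5], [0.25]))  # shorter track simply ends early
print(mix([1.0], [1.0]))        # sum is too loud, so it is scaled down
```

Real editing software offers per-track volume controls instead of scaling everything at the end, but the adding step is the same idea.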

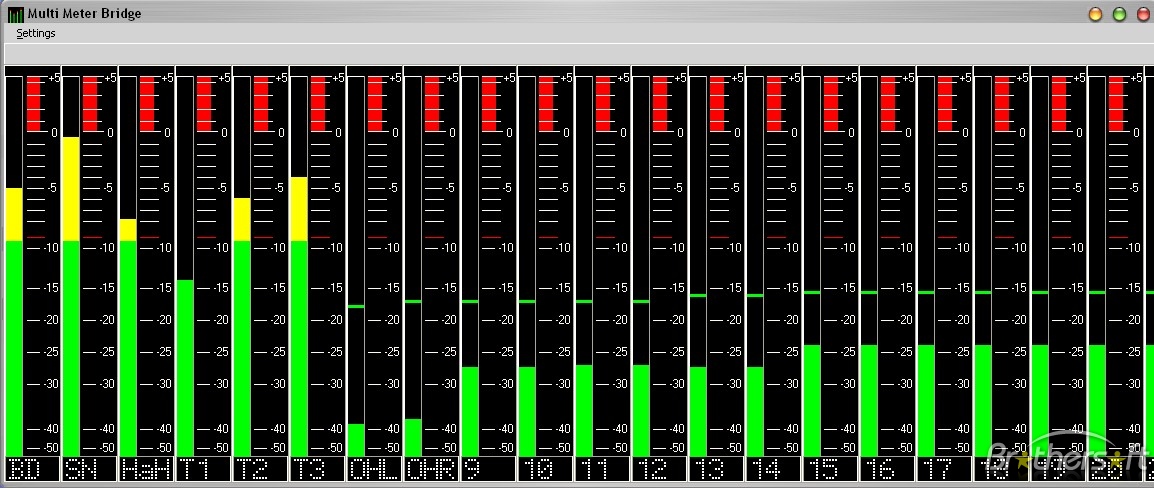

Levels

Avoid setting sound levels too low or too high. Anything that goes over 0 dB into the red zone is too loud and will clip. A mid range of -14 to -10 dB is an ideal average level for audio. If the audio has multiple tracks, sound effects can be a little bit lower, while dialogue should be higher than a background music soundtrack.
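The meter readings can be reproduced with a short calculation. This sketch assumes samples are stored as numbers between -1 and 1, where 1.0 corresponds to 0 dB on the meter, and it measures the peak level rather than an average. The function names and the -12 dB target are example choices.

```python
import math

def peak_level_db(samples):
    """Level of the loudest sample relative to full scale (0 dB)."""
    peak = max(abs(sample) for sample in samples)
    if peak == 0:
        return float("-inf")  # pure silence
    return 20 * math.log10(peak)

def gain_for_target(samples, target_db):
    """Multiplier that moves the peak level to the target level."""
    return 10 ** ((target_db - peak_level_db(samples)) / 20)

clip = [0.5, -0.25, 0.1]
print(round(peak_level_db(clip), 2), "dB peak")
gain = gain_for_target(clip, -12)
print(round(peak_level_db([s * gain for s in clip]), 2), "dB after adjusting")
```

Halving the sample values lowers the level by about 6 dB, so the recommended range sits at roughly a fifth to a third of full scale.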

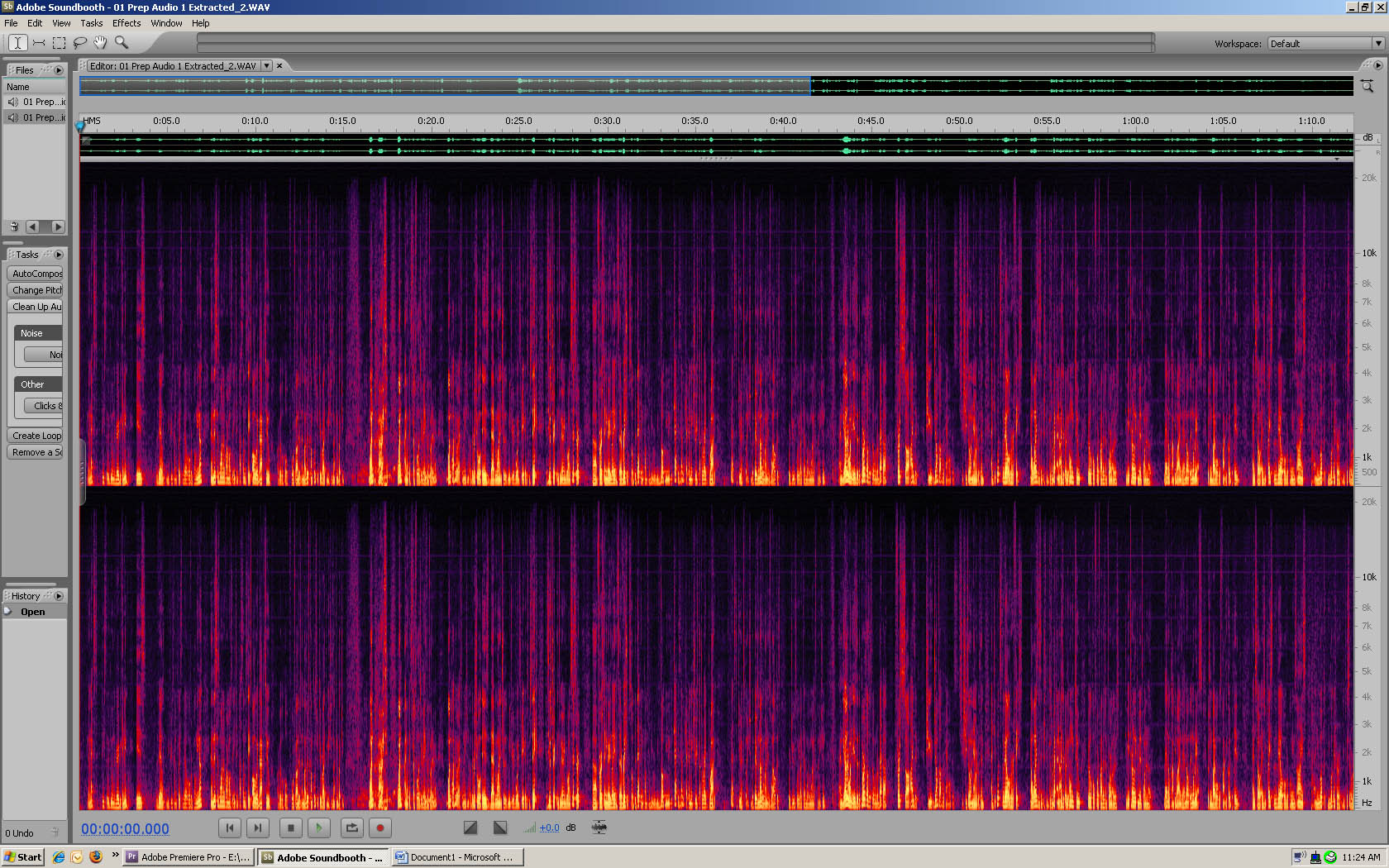

What editing software to use?

A Digital Audio Workstation (DAW) is the software used to record, edit, and mix audio. Commercial DAWs are common, but there are free tools available as well. Ultimately a program doesn't determine how good your music will sound. It all comes down to personal preference and how well you know your own music.

As long as the original audio is recorded properly and you export at the correct sampling rate and file type, your audio should turn out fine. Just be aware that the more the waveforms are altered from their original state, the more distortion is likely to occur. It is best to try and record as close to what you want your music to sound like as possible, and then there will be less post-work to do. Changing volume and overlapping multiple tracks is usually OK, but adding effects or changing pitch and tempo will sometimes cause undesirable sound distortion.

Also keep in mind that computer speakers and headphones can make the music sound very different than how it will play on loudspeakers or on different computers. It is a good idea to check on multiple devices to ensure the final sound plays how you want it to.

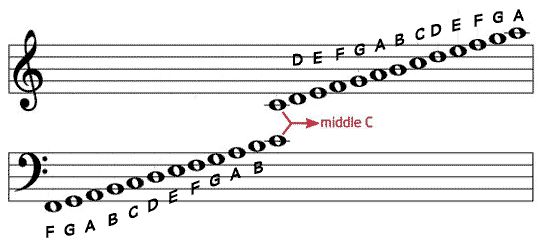

Scales Notation

Music notes represent different pitches that are labeled using letters of the alphabet. The standard C Major scale starts with C and ends with C. If that's as confusing as it sounds, the notes are equivalent to the "do re mi fa so la ti do" thing from The Sound of Music.

Sheet music is split into two sets of five lines. Each set is called a staff. The big squiggle thing on the upper staff is called the Treble Clef, while the lower squiggle is the Bass Clef. Together, these form the Grand Staff. Due to things like sharp notes and flat notes, there are 12 pitches total in an octave, each pitch being set one semitone or half-step above the last.

Michael New: Major and Minor Keys (Music Theory)

The Sound of Music

Notes

Notes are placed along the Grand Staff at the height correlating to the pitch of the note that should be played. The timing of notes is indicated by the type of note. A whole note lasts the full length, while a quarter note lasts one quarter as long.

Notes have alternate versions where a note is lowered or raised by one semitone. A flat note is one key lower, while a sharp note is one key higher than normal.

Octave

An octave represents the eight-note interval between a note's pitch and one with double or half its frequency. In simpler terms, going up or down an octave indicates a significant tonal shift in the music. On a piano, an octave would measure the span from C D E F G A B C.
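The doubling rule can be turned into a small calculator. This sketch assumes the modern equal-tempered tuning, where an octave is split into 12 equal semitone steps, and uses the common 440 Hz reference for the A above middle C. Neither assumption comes from the text, and the function name is made up.

```python
A4_HZ = 440.0  # assumed reference pitch for the A above middle C

def shift_semitones(frequency_hz, semitones):
    """Frequency after moving up (or down, if negative) by semitones."""
    return frequency_hz * 2 ** (semitones / 12)

print(shift_semitones(A4_HZ, 12))            # one octave up
print(shift_semitones(A4_HZ, -12))           # one octave down
print(round(shift_semitones(A4_HZ, 3), 2))   # three semitones up, the C above
```

Twelve semitone steps multiply the frequency by exactly two, which is why the note at the top of the octave shares its letter name with the one at the bottom.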

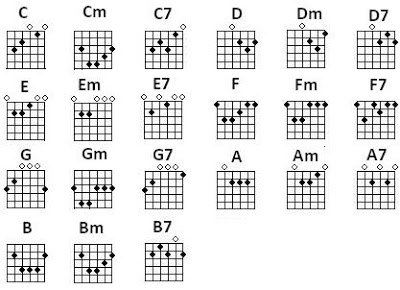

Chords and Melody

A chord is a group of notes played simultaneously, usually three at a time. Chords can be strung together in a large variety of combinations. A melody is the musical succession of notes and chords.

Vocal Classification

Human vocals are classified differently from the musical scale. The highest vocal register for women is Soprano, Mezzo-Soprano is mid-range, and the lowest is Contralto. For males, Countertenor is the highest register, then Tenor, Baritone, with Bass being the lowest.

Music Structure and Aesthetics

Rhythm is the recurring beat structure throughout a musical segment. Tempo refers to the speed of the music, measured in beats per minute. Meter is the pattern of stressed and unstressed beats, denoted by a time signature on the staff.

Musical aesthetics are a little vague and loosely defined, but the terms are used to describe how music sounds. Timbre refers to the tone color of a sound, the quality that makes a violin sound different from a flute playing the same note. Harmony is how the combined instrumentals and vocals sound together as a composition. Dynamics refers to how soft or loud the music is. Texture describes how the instrumentals join together with the melody and other elements.

Fantasia: Toccata and Fugue in D Minor
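Tempo translates directly into note lengths. The sketch below assumes the most common meter, where a quarter note gets one beat and a whole note lasts four beats. The function names and the 120 beats-per-minute example are illustrative only.

```python
def beat_seconds(beats_per_minute):
    """Length of a single beat at the given tempo."""
    return 60.0 / beats_per_minute

def note_seconds(beats_per_minute, fraction_of_whole, beats_per_whole=4):
    """Length of a note given as a fraction of a whole note.

    Assumes a whole note spans four beats (quarter note gets the beat).
    """
    return beat_seconds(beats_per_minute) * beats_per_whole * fraction_of_whole

tempo = 120
print(beat_seconds(tempo), "seconds per beat")
print(note_seconds(tempo, 1), "seconds for a whole note")
print(note_seconds(tempo, 0.25), "seconds for a quarter note")
```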

Fantasia: Toccata in Fugue in D Minor

Music and the Brain

Even though listening to Mozart as a baby doesn't actually make people grow up to be smarter, music has been shown in scientific studies to have many interesting effects on the brain. Music is believed to stimulate creativity and improve reasoning skills, and it has the ability to impact moods and behavior. A lot of theories have yet to be definitively proven, but as more research is done in the fields of neurology, psychology, and music theory, new relationships will likely be discovered.

BufferApp: 8 Surprising Ways Music Affects and Benefits our Brains

Bored Panda: I See Music Because I Have Synesthesia, So I Decided To Paint What I Hear

Improvisation

Creating beats on the fly is fairly advanced, even for experienced musicians. Electronic Deep House music has a strong emphasis on live performances, and every performance often sounds very different due to the way the mixer or band will randomly throw in different effects.

Sampling

Sampling is a staple of digital remixing. It is also highly disputed. Sampling can refer to taking snippets of sound files online, or it can refer to how short samples of other existing songs are taken and reworked into a new composition. Usually the extent of borrowing only applies to a certain beat, with a lot of modification and original material added in. However, if a sampled beat is compared directly to its source, the similarities may be noticeable. Borrowing ideas and seeking to improve on them is nothing new in history, but a lot of musicians don't see it that way.

The Verge: The Weeknd is being sued for sampling part of 'The Hills'

Rolling Stone: Led Zeppelin 'Stairway' Verdict: Inside the Courtroom on Trial's Last Day

TED: Mark Ronson- How sampling transformed music

Setting up Live Performances and Recording Sessions

Bands have to be able to set up equipment quickly, and pack things up just as quickly afterwards. Venues typically have busy schedules, so reservations have a short turnaround. It is always best to bring spare equipment in case of an emergency, like the amp exploding and accidentally catching the drum set on fire. Having a manager helps the band stay organized. Invite friends so the audience is populated with groupies who will clap even if the performance is terrible.

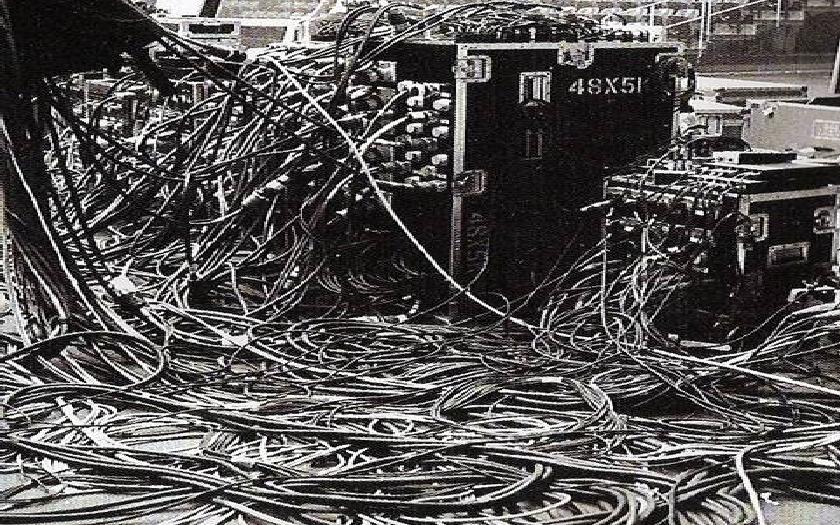

Cables

Keeping track of the different functions of audio cables can be a nightmare, especially at venues that don't have enough power outlets. DMX, MIDI, XLR, TRS, the dreaded banana plug... Why are there so many connectors?

Stage music generally consists of multiple sound sources: the band members and instruments. These sounds are passed through microphones and amplifiers, which are in turn connected to power outlets and a soundboard or mixer. The soundboard is designed to allow fine adjustment controls for each instrument separately, so that volume can be raised for the bassist without affecting the volume of the lead singer. Cables running from the stage equipment are assigned separate input and output channels on the soundboard. The power cables are always kept separate from the audio cables to avoid blowing out the entire chain of connected equipment. It may seem unnecessarily complex, but all the weird cables serve the purpose of adding more control over the recording process.

SonicBids: The Quick and Dirty Guide to Cables for Musicians

E-Home Recording Studio: The Ultimate Guide to Audio Cables for Home Recording

Recognition

It is notoriously difficult to secure a record deal or make it into the profitable part of the music industry. Most bands will never gain fame or fortune doing what they love. Your band can still enjoy making music at smaller venues and sharing work online. The more you attempt to network and travel to events, the more likely you will build up a fanbase and meet other artists who can help out. Collaborations are a good way to establish good relations in the industry.

Be creative with what resources you have. Most bands can't afford pyrotechnic displays or laser light shows, but a big budget is hardly the only way to get attention. A little talent and originality can go a long way.

Somebody That I Used to Know - Walk off the Earth

Crystallize - Lindsey Stirling

Advanced Tutorials- Hopefully Coming Soon

Video

Harmonic Wave

Doppler Effect

Equalization

Pitch Shift

Modal

Diatonic

Auto-Tune

Phaser

Filters

Wah-Wah

ADR